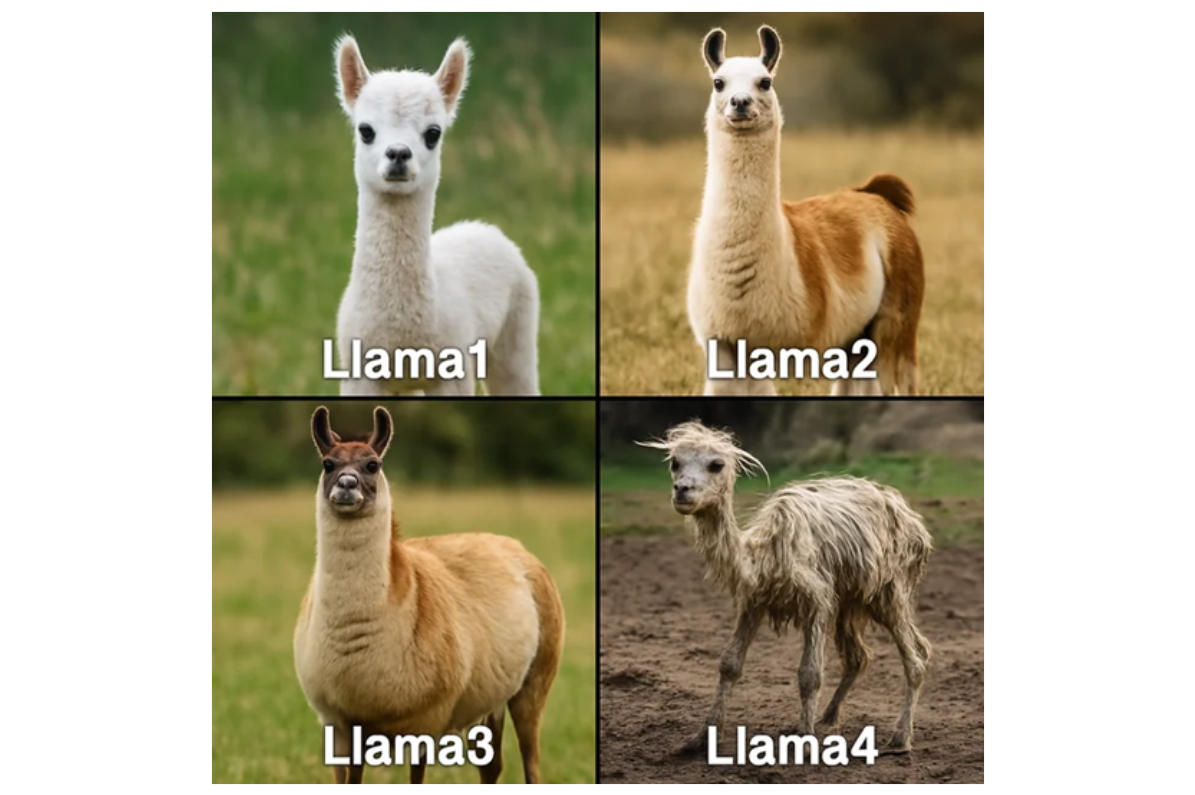

Margin of Safety #10: Llama 4 Looked Great on the Leaderboard. Why Doesn’t It Always Feel That Way?

Jimmy Park, Kathryn Shih

April 15, 2025


Llama 4 shows the gap between benchmark success and real-world utility

Beyond the benchmarks: What the latest Llama release tells us about AI evaluation, open source, and the race for capability.

Another week, another major AI model release to shake things up. Meta recently released the first two models from the new Llama 4 family, advertising impressive benchmark scores and a familiar-looking mixture-of-experts architecture. On paper and on initial leaderboards, it looked like another step forward in quality for open(ish)-weight models.

And yet… the initial buzz has been tempered by reports of mixed quality and experiences that don’t quite match the leaderboard highs. What’s going on? Some of this seems traceable to specific choices Meta made in its quality evaluation, but it also highlights a bigger, industry-wide issue: our current methods for evaluating AI models struggle to fully predict real-world usefulness.

Unpacking the Llama 4 Launch: Benchmark Games or Growing Pains?

So, what drives this gap between benchmark performance and usefulness? Several factors are potentially at play.

One clear factor was that some benchmark results did not reflect the released model. For the popular LMArena leaderboard, it has emerged that Meta submitted a special, non-public “experimental” version of Maverick. This version was specifically tuned for the chat arena use case; qualitatively, it is highly verbose and prone to using stylistic flourishes and emojis to charm voters. While Meta acknowledged that this version was experimental, its initial opacity drew community backlash. LMArena has now banned such specially tuned models.

This situation highlights a common dynamic: tuning trade-offs. It’s often possible to improve a model’s abilities on a single dimension (such as conversational chat) by sacrificing performance on other tasks. Human review of some of the LMArena chat responses points to this; the high-scoring version of Maverick would likely struggle in other contexts. See the examples here and here, where Maverick’s answer to a simple question has style but is incorrect (compared to Claude’s).

Potentially worse is the possibility of Meta “teaching to the test.” Anonymous allegations surfaced suggesting that Meta tuned Llama 4 specifically on answers to common benchmark questions. This would be akin to giving students the exam questions beforehand: it drives substantial performance gains that don’t replicate outside of the benchmark (or exam). Adding to the suspicion, the MATH-P benchmark¹, which slightly alters a common math benchmark in order to defeat models overly reliant on rote memorization, shows a somewhat unusually sharp performance drop for Llama 4. However, Ahmad Al-Dahle, Meta’s VP of Generative AI, has explicitly denied that Llama 4 was trained on benchmark answers, so we think it’s premature to conclude that this occurred.

However, even without deliberate manipulation, systemic issues can lead to accidental training on test data. Popular benchmark datasets are increasingly scattered across the web and inevitably find their way into the massive web crawls used for pre-training, leading to accidental “learning the test.” Moreover, the prominence of certain benchmarks naturally incentivizes labs to focus development efforts on the capabilities those benchmarks reward, potentially at the expense of other valuable skills. Both dynamics offer a partial explanation for the observed benchmark over-performance without requiring any deliberate shenanigans on Meta’s part.
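A common heuristic for spotting this kind of accidental contamination is to check whether long word n-grams from a benchmark item appear verbatim in the training corpus. The sketch below is illustrative only: it assumes simple lowercase word tokenization, an in-memory list of documents, and n = 8, all of which are our own choices, and the function names are hypothetical. Production decontamination pipelines run over web-scale crawls and vary in n-gram length and text normalization.

```python
import re


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of lowercase word n-grams in `text`."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def contamination_rate(benchmark_item: str, corpus_docs: list[str], n: int = 8) -> float:
    """Fraction of the benchmark item's n-grams that also appear in any corpus document.

    Hypothetical helper: 1.0 means every n-gram was found verbatim in the corpus.
    """
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:  # item shorter than n words: nothing to compare
        return 0.0
    corpus_grams: set[tuple[str, ...]] = set()
    for doc in corpus_docs:
        corpus_grams |= ngrams(doc, n)
    return len(item_grams & corpus_grams) / len(item_grams)


question = "If a train travels 60 miles in 1.5 hours, what is its average speed in miles per hour?"
leaked_page = "Practice set: " + question + " Answer: 40."
print(contamination_rate(question, [leaked_page]))  # 1.0: every 8-gram appears in the "crawl"
```

A high overlap rate flags an item as likely seen during training; a low rate does not prove the item is clean, since paraphrased copies slip past exact matching.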

Finally, everyone should be aware of the potential for benchmark cherry-picking. With a vast landscape of available benchmarks, developers are naturally tempted to highlight the ones where their model excels, or at least where its shortfalls are smallest. Featuring only these favorable results in press releases can create a misleadingly positive impression, because the selection favors benchmarks where the model got lucky on the test over ones that reflect genuinely outstanding performance.
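The selection effect is easy to see in a toy simulation. The sketch below assumes two hypothetical models with identical underlying skill, scores each on many benchmarks with random noise, and then “highlights” only the benchmarks where model A happens to lead. The baseline score, noise level, and benchmark count are arbitrary assumptions, not measurements of any real model.

```python
import random
import statistics


def simulate_cherry_picking(
    num_benchmarks: int = 40, top_k: int = 5, noise_sd: float = 2.0, seed: int = 0
) -> tuple[float, float]:
    """Return (mean A-minus-B margin over all benchmarks, mean margin over the top_k highlighted ones)."""
    rng = random.Random(seed)
    true_score = 70.0  # assumption: both models share the same underlying skill
    margins = []
    for _ in range(num_benchmarks):
        # Each benchmark score is the true skill plus independent measurement noise.
        score_a = true_score + rng.gauss(0, noise_sd)
        score_b = true_score + rng.gauss(0, noise_sd)
        margins.append(score_a - score_b)
    # "Press release" view: keep only the benchmarks where A leads by the most.
    highlighted = sorted(margins, reverse=True)[:top_k]
    return statistics.mean(margins), statistics.mean(highlighted)


all_margin, highlighted_margin = simulate_cherry_picking()
print(f"average margin across all benchmarks:     {all_margin:+.2f} points")
print(f"average margin on highlighted benchmarks: {highlighted_margin:+.2f} points")
```

Averaged over every benchmark, the margin hovers near zero; averaged over the hand-picked ones, it is reliably positive, even though neither model is actually better. Committing to a benchmark set before seeing results is one way to avoid this bias.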

The Bigger Problem: Benchmarks vs. Reality

The Llama 4 situation is really a symptom of a larger ailment in the AI field. The core issue is that standard academic benchmarks are often inadequate for predicting how well a model will perform for your specific needs.

Your best summer intern probably couldn’t ace a comprehensive exam covering the breadth of human knowledge, yet exactly that kind of exam is a cutting-edge research benchmark. That same intern, however, can learn your company’s specific jargon, adapt to your workflows, understand implicit context, and handle the weird edge cases unique to your business more easily than even a top-scoring LLM. This doesn’t render broad benchmarks useless, but it clearly shows they are not a direct proxy for success in a specific, real-world role. Readers who aren’t familiar with Claude (or Gemini) playing Pokémon can look there for a practical example of the gap between LLMs and humans: LLMs famously struggle with the classic Nintendo game, even though 8-year-old children master it quickly.

This leads to a crucial takeaway, echoed across industry blogs and practitioner forums: robust evaluation (‘eval’) tailored to your specific use case is no longer optional; it’s a core competency. You need to assess models not just on standard benchmarks, but on how they perform on your tasks, covering both the typical “happy path” scenarios and, critically, the inevitable corner cases.
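As a starting point, a use-case eval can be as simple as a list of prompts with pass/fail checks, tagged by whether they exercise the happy path or a corner case. The sketch below is hypothetical: `toy_model` stands in for whatever model API you actually call, and the part-code normalization task is an invented example. Reporting pass rates per tag, rather than one blended score, keeps corner-case failures from being averaged away.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # returns True if the model output is acceptable
    tag: str  # e.g. "happy_path" or "corner_case"


def run_eval(model: Callable[[str], str], cases: list[EvalCase]) -> dict[str, float]:
    """Return the pass rate for each tag so corner-case failures stay visible."""
    totals: dict[str, int] = {}
    passes: dict[str, int] = {}
    for case in cases:
        totals[case.tag] = totals.get(case.tag, 0) + 1
        if case.check(model(case.prompt)):
            passes[case.tag] = passes.get(case.tag, 0) + 1
    return {tag: passes.get(tag, 0) / total for tag, total in totals.items()}


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: prefixes the last word with 'ACME-'."""
    return "ACME-" + prompt.split()[-1].upper()


cases = [
    EvalCase("Normalize the part code abc123", lambda out: out == "ACME-ABC123", "happy_path"),
    EvalCase("Normalize the part code xyz9", lambda out: out == "ACME-XYZ9", "happy_path"),
    # Corner case: input already carries the prefix, which should not be doubled.
    EvalCase("Normalize the part code ACME-abc123", lambda out: out == "ACME-ABC123", "corner_case"),
    # Corner case: no part code at all; the expected behavior is an empty answer.
    EvalCase("Normalize the part code  ", lambda out: out == "", "corner_case"),
]

print(run_eval(toy_model, cases))  # {'happy_path': 1.0, 'corner_case': 0.0}
```

Here a blended score of 50% would hide the fact that the toy model fails every corner case while passing every happy-path case.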

Why Use Cases Defy Easy Benchmarking

Aligning benchmarks with real-world value is incredibly difficult for several fundamental reasons. One major challenge is corner cases. Real-world success often hinges on handling unusual inputs or situations specific to a particular domain, something benchmarks struggle to capture comprehensively. A benchmark that exhaustively covered corner cases across many domains would be impossibly large. Worse, many companies treat their unique corner cases as a proprietary business advantage, making it hard to include them in public benchmarks.

Another hurdle is the difficulty of measuring the unquantifiable. Many desirable model behaviors, such as establishing rapport in a sales conversation or conveying the right empathetic tone in customer service, resist easy numerical scoring. While methods like human evaluation exist, fully simulating the nuanced expectations of specific contexts and roles remains a persistent challenge.

These flaws in benchmarking aren’t just academic; they ripple outward, influencing how companies build, deploy, and invest in AI systems. As Llama 4’s mixed reception shows, the consequences aren’t limited to individual users. They extend into the economic and national security questions that now define the competitive landscape of open-weight AI.

Economic Implications and Open Source Questions

The Llama 4 narrative raises economic and strategic questions for the broader AI ecosystem. The open-source AI race increasingly resembles a high-stakes derby. Meta introduced Llama 4 hoping to sprint ahead but stumbled out of the gate, while DeepSeek, once a dark horse, is suddenly pulling ahead. In an era of rising digital nationalism, depending on a Chinese model like DeepSeek for frontier AI starts to feel less like pragmatic engineering and more like strategic risk-taking. Will that translate into broader challenges for strategies reliant on open-source models?

This situation raises a provocative question: competence or chicanery? Llama 4 uses a mixture-of-experts (MoE) architecture similar to DeepSeek R1’s, yet DeepSeek appears to substantially outperform it in real-world use. Is DeepSeek’s team simply more effective, or did it gain an edge through methods Meta is unwilling or unable to employ, such as the rumored incorporation of ChatGPT outputs into its training data? If a competence gap is the answer, Meta will have to reevaluate its approach to AI execution. But if ethically questionable shortcuts are a key driver of the performance gap, that casts a shadow over the prospects for fair competition in open source.

These uncertainties translate directly into business model risk. Companies betting heavily on open source, especially if they believe the cutting edge might be tainted by questionable data practices, need a clear strategy. This might involve focusing on more commoditized use cases where “good enough” performance suffices, or accepting that access to the absolute highest tier of capabilities from purely open, ethically sourced models might be limited in the near future.

Looking Ahead: Evals Matter More Than Ever

The Llama 4 launch, with its mix of genuine advancement and confusing evaluation signals, underscores a critical truth: we’re moving beyond the era where a simple leaderboard score tells you what you need to know.

The models are getting incredibly complex, and the ways they succeed or fail in the real world are becoming more nuanced. Relying solely on public benchmarks is becoming increasingly risky. The focus must shift towards rigorous, context-aware, multi-dimensional evaluation tailored to specific applications. Understanding how to evaluate, what to evaluate, and recognizing the inherent limitations of current benchmarks isn’t just a technical exercise – it’s fundamental to building reliable, effective, and trustworthy AI systems.

What are your thoughts? How is your organization approaching AI model evaluation beyond standard benchmarks? Are you or your friends building the next AI benchmark? Let us know in the comments.

1 You can see benchmark results here: https://math-perturb.github.io

Image Credit: First spotted by these authors on Reddit

Stay tuned for more insights on securing agentic systems. If you’re a startup building in this space, we would love to meet you. You can reach us directly at: kshih@forgepointcap.com and jpark@forgepointcap.com.

This blog is also published on Margin of Safety, Jimmy and Kathryn’s Substack, as they research the practical sides of security + AI so you don’t have to.