Margin of Safety #6: Agentic Disruption

Jimmy Park, Kathryn Shih

March 4, 2025

Innovator’s dilemma as applied to agentic adoption

AI is currently flooding cybersecurity. SOC automation startups alone have raised over $150M in the last 12 months, not counting investments in established SIEM/SOAR players. While many of these efforts focus on delivering sophisticated capabilities to advanced players, the mechanics of AI implementation suggest that the most successful efforts may be those that focus on smaller customers and simpler tasks.

This happens because AI is often strongly subject to a quality feedback loop in which better data produces better results. This feedback loop amplifies a classic technology trend (outlined in Clay Christensen’s The Innovator’s Dilemma) in which new, immature technologies gain powerful footholds with small, classically underserved customers: “disruption from the bottom.” AI agents that can leverage bottom-up disruption to gain early quality have an opportunity to convert their early leads into a persistent technical advantage.

To better predict the types of agents that will succeed with this approach, let’s review the details.

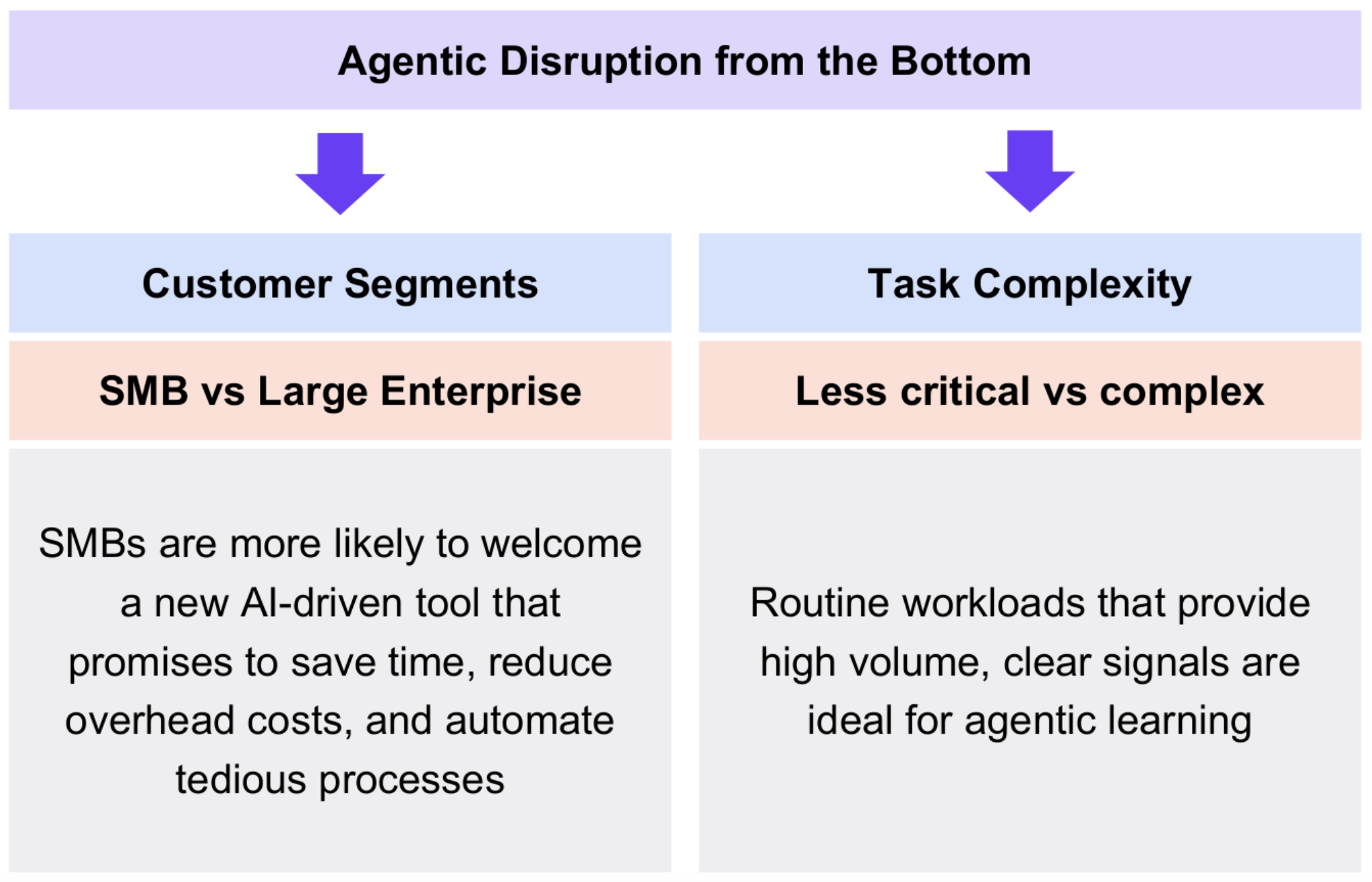

The pattern of “disruption from the bottom” has two primary dimensions:

1. Customer Segments: Smaller organizations, such as small and medium-sized businesses (SMBs), that lack the resources or scale for enterprise-grade solutions.

2. Task Complexity: Less critical, more routine workloads that are easier to automate and carry fewer risks. An agent might start with Level 1 SOC analyst tasks before climbing to more advanced levels. A key feature of these tasks is that a “good enough” solution can serve a classically underserved user base.

Let’s unpack these dimensions in more depth, and then look at other angles that fall under the umbrella of bottom-up disruption.

Three Characteristics of Tasks Ripe for Agentic AI

Before we dive deeper into how bottom-up disruption works in cybersecurity, let’s recap key characteristics that make certain tasks or roles especially vulnerable to agentic AI takeover:

1. Highly Observable, often due to a digital substrate

Successful automation is typically contingent on being able to closely observe both the system and its environment; without understanding these, direct action is often futile. While this doesn’t always require a digital substrate (self-driving cars work around this problem with heavy investments in sensor technology), it’s typically easiest to roll out agents in digital environments or in contexts where rich sensor networks are already deployed.

2. Well-Defined, Quantitative Success Metrics

Clear, easily measurable targets, such as whether a remediation blocks a known exploit, allow for construction of high-quality feedback loops and reduce the risk that the AI adopts undesirable strategies. Conversely, tasks with fuzzy goals (“negotiate with a ransomware attacker”) can be difficult to even evaluate, let alone optimize.

3. High data volumes, especially for repeated tasks

The more data the system has to learn from, the better it can refine its actions. Logs, vulnerability databases, and exploit signatures provide inputs, but repeated outputs are just as important. If a task occurs only once, its result can be measured, but a single data point cannot be generalized into a system improvement. As similar tasks are repeated, it becomes possible to generalize successes and failures into system improvements. This becomes the core of the positive feedback loop that can allow a disruptive system to begin with a “good enough” entry-level solution and grow that into a best-in-class technical moat.
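To make the volume point concrete, here is a minimal sketch of how uncertainty about an agent’s measured success rate shrinks as the same task is repeated. It uses the standard Wilson score interval; the function name `wilson_interval` and the 90% success rate are illustrative assumptions, not figures from any real deployment.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for an observed success rate."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half_width = (z / denom) * math.sqrt(
        p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)
    )
    # Clamp to [0, 1] to absorb floating-point drift at the edges.
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Assumed scenario: the agent succeeds on roughly 90% of tasks at every volume.
for trials in (1, 10, 100, 1000, 10000):
    successes = round(0.9 * trials)
    low, high = wilson_interval(successes, trials)
    print(f"{trials:>6} tasks: plausible success rate between {low:.3f} and {high:.3f}")
```

With a single observation the interval spans most of the range from 0 to 1, so there is little to generalize from. With thousands of repetitions it becomes tight enough to tell a real improvement from noise.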

What drives quantitative success metrics?

There are a few tricks for finding tasks that can have strong quantitative success metrics.

One is to look for tasks where an AI engine can propose solutions within a constrained domain, and those solutions can then be vetted by other methods. In this architecture, hallucination becomes creativity (a feature, not a bug), while the other methods ensure that the creativity is vetted and pressure-tested. In many ways, coding partially fits this pattern, since a compiler or interpreter can provide an initial (albeit incomplete) evaluation of solution correctness.
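The propose-then-vet pattern can be sketched in a few lines. In this hypothetical example, a hard-coded list stands in for the AI engine’s proposals, Python’s `ast.parse` plays the role of the compiler check, and a small behavioral test acts as the second vetting method. The helper names (`parses`, `passes_tests`, `first_vetted`) and the `double` task are assumptions made up for illustration.

```python
import ast
from typing import Callable, Iterable, Optional


def parses(source: str) -> bool:
    """Cheap first check: does the candidate even parse as Python?"""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False


def passes_tests(source: str) -> bool:
    """Stricter check: run the candidate and compare its behavior to the spec."""
    namespace: dict = {}
    try:
        # Only acceptable here because the candidates are hard-coded below;
        # untrusted proposals would need a real sandbox.
        exec(source, namespace)
        double = namespace["double"]
        return all(double(x) == 2 * x for x in (-3, 0, 7))
    except Exception:
        return False


def first_vetted(
    candidates: Iterable[str], checks: Iterable[Callable[[str], bool]]
) -> Optional[str]:
    """Return the first proposal that survives every check, or None."""
    checks = list(checks)
    for candidate in candidates:
        if all(check(candidate) for check in checks):
            return candidate
    return None


# Stand-in for a generative model's proposals (hypothetical).
candidates = [
    "def double(x) return x * 2",        # does not parse
    "def double(x):\n    return x + 2",  # parses, but is wrong
    "def double(x):\n    return x * 2",  # parses and is correct
]

print(first_vetted(candidates, [parses, passes_tests]))
```

Note that the parser alone would accept the second candidate, which is why the compiler counts only as an initial, incomplete evaluation. The proposer is free to be creative precisely because the checks are strict.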

Another is to look for tasks that avoid common pitfalls, such as strong reliance on soft skills, negotiation, and personal rapport. Oftentimes, tasks with these traits are both less repeatable and harder to measure: each interaction is more of a one-off, and it can be difficult to quantify what a “good” result is.

Bottom-up disruption

SMB Adoption

Small and medium-sized businesses often lack robust in-house expertise—or the budget to hire top-tier consultants—to manage security or other specialized tasks. Compared to major enterprises that can afford entire security teams or premium consulting services, SMBs are more likely to welcome a new AI-driven tool that promises to save time, reduce overhead costs, and automate tedious processes. For many SMBs, the alternative to an agentic AI solution might simply be no solution at all. After agentic AI proves its capabilities within SMBs—showing a tangible reduction in breaches or an improvement in operational efficiency—it becomes easier to “climb” up to mid-sized and large organizations.

Less Critical, Routine Workloads

Routine workloads that provide high-volume, clear signals are ideal for agentic learning. One example is Level 1 SOC work, which often involves repetitive scanning of alerts, logs, or flags that either do or don’t match known threat patterns. Less critical tasks also involve lower risk, so if the AI misses something at Level 1, there’s a chance to catch the error in subsequent levels of review. Lastly, there is simply less resistance. Senior analysts may be more accepting of an AI that filters out noise than of one that attempts to replace complex forensic analysis or threat hunting (which might be seen as encroaching on their domain).

From here, agentic AI can progress to handle increasingly advanced functions—like deeper threat detection (Level 2 or 3), incident containment, and eventually complex investigative analysis or Level 4 tasks.
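As a rough illustration of the Level 1 starting point described above, the sketch below matches alerts against known patterns and hands anything ambiguous to a human reviewer. The `Alert` type, the signature lists, and the three outcome labels are all hypothetical; a real SOC pipeline would draw on far richer detection content.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    source: str
    message: str


# Hypothetical pattern lists, invented for this example.
KNOWN_BAD = ("mimikatz", "powershell -enc")
KNOWN_BENIGN = ("scheduled backup completed", "certificate renewed")


def triage(alert: Alert) -> str:
    """Return 'escalate', 'close', or 'review' for a single alert."""
    text = alert.message.lower()
    if any(signature in text for signature in KNOWN_BAD):
        return "escalate"  # matches a known threat pattern
    if any(signature in text for signature in KNOWN_BENIGN):
        return "close"     # matches known noise
    return "review"        # ambiguous: leave it for a human analyst


alerts = [
    Alert("edr", "Process started: PowerShell -enc SQBFAFgA"),
    Alert("backup", "Scheduled backup completed on host-12"),
    Alert("proxy", "Unusual outbound traffic volume from host-07"),
]

for alert in alerts:
    print(f"{alert.source:>6}: {triage(alert)}")
```

Sending unmatched alerts to review rather than closing them keeps the later levels of review in place as a safety net. Each human decision on those alerts is also exactly the kind of repeated, labeled outcome the feedback loop needs.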

Under-Regulated Domains

Highly regulated fields can create daunting compliance barriers to new AI solutions, as the bar for “good enough” may be very high. In contrast, less regulated niches (or smaller players within regulated markets that have fewer compliance complexities) may adopt agentic AI faster, enabling those solutions to mature before tackling high-compliance contexts.

Looking ahead

Eventually, as agentic AI matures, it won’t remain confined to the bottom. Its reach will extend into larger organizations and sophisticated, mission-critical workloads—including advanced threat hunting, real-time incident containment, and strategic decision-making. By that point, AI will have evolved far beyond the “novice” label, supported by extensive training data, tested integrations, and a track record of reliability in lower-risk scenarios.

This trajectory is not unique to cybersecurity. We’ve seen it play out with cloud computing, open-source software, and countless other revolutions in tech. The future of agentic AI across any domain is likely to follow this same arc: start small, gain traction where needs are pressing but expertise is scarce, refine continuously, and then rise to displace—or at least augment—longstanding incumbents and high-tier professionals.

Stay tuned for more insights on securing agentic systems. If you’re a startup building in this space, we would love to meet you. You can reach us directly at: kshih@forgepointcap.com and jpark@forgepointcap.com.

This blog is also published on Margin of Safety, Jimmy and Kathryn’s Substack, as they research the practical sides of security + AI so you don’t have to.