Margin of Safety #1: Securing Agentic AI

Jimmy Park, Kathryn Shih

January 28, 2025


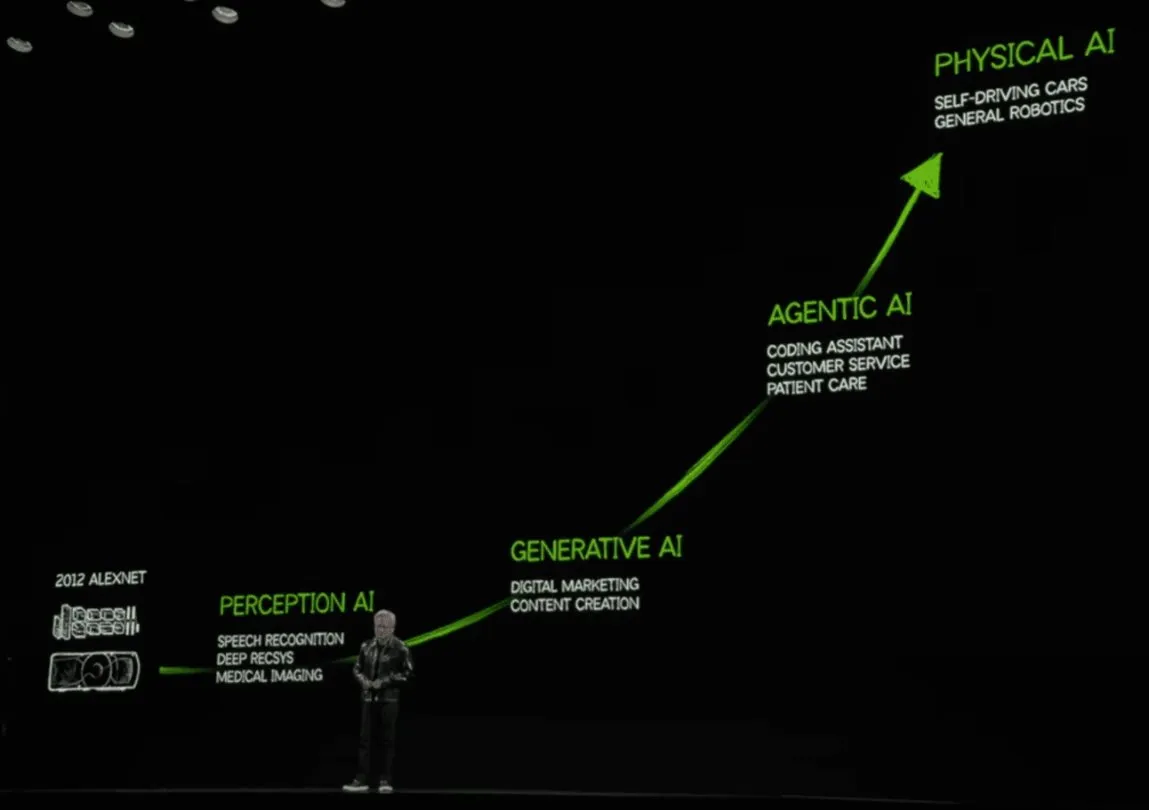

“AI agents are a multi-trillion dollar opportunity.”

Jensen Huang, CEO of Nvidia, at CES 2025

TLDR: Ask your agentic provider to secure the agent they are selling.

Agentic AI (intelligent systems capable of autonomously planning and executing complex tasks) has captured the imagination of startups, enterprises, and investors alike, thanks to breakthroughs in underlying AI methods and in the planning and reasoning capabilities of the most advanced LLMs. While these systems unlock unprecedented efficiency and innovation, they also raise new security risks.

One critical concern is observability across platform boundaries. Even when agentic platform providers like LangChain or Crew.ai offer internal monitoring, agent interactions that span multiple platforms remain opaque, so unexpected or malicious agent behavior can go unnoticed. Jailbreaking, or tricking models into unintended actions, is another lurking threat. Adding complexity, many agentic contexts involve a series of reasonable actions that can be combined to unreasonable effect.

For example, a customer service agent can reasonably review customer order histories and offer standard make-good offers to customers who have experienced lost orders or other service failures. But a savvy attacker could attempt to trick an agent into responding to the loss of a $10 order with a $500 credit. Each step (looking up the order, determining damages and the customer’s lifetime value, and issuing a $500 credit) could be individually reasonable within the scope of some customer service workflows, but the steps aren’t reasonable in combination. It’s critical that monitoring tools can not only monitor individual actions, but also detect behavioral abnormalities that are only obvious within a broader context.
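To make the customer service example concrete, here is a minimal sketch of a context-aware check. It assumes a hypothetical action log in which each entry records a session, an action kind, and a dollar amount; the `AgentAction` type, the action names, and the 2x credit ratio are all illustrative assumptions, not part of any real platform or policy. The point is only that the check looks at the whole session rather than at each action in isolation.

```python
from dataclasses import dataclass


@dataclass
class AgentAction:
    """Hypothetical log entry for one agent action (illustrative only)."""
    session_id: str
    kind: str            # e.g. "lookup_order", "assess_damages", "issue_credit"
    amount: float = 0.0  # dollars: order value for lookups, credit size for credits


# Assumed policy: a make-good credit should not exceed this multiple
# of the value of the order it compensates for.
MAX_CREDIT_RATIO = 2.0


def find_disproportionate_credits(actions, max_ratio=MAX_CREDIT_RATIO):
    """Return (session_id, order_value, credit) for credits that are out of
    proportion to the order looked up earlier in the same session."""
    order_value = {}
    flagged = []
    for action in actions:
        if action.kind == "lookup_order":
            order_value[action.session_id] = action.amount
        elif action.kind == "issue_credit":
            value = order_value.get(action.session_id)
            # A credit with no preceding order lookup is also flagged.
            if value is None or action.amount > max_ratio * value:
                flagged.append((action.session_id, value, action.amount))
    return flagged


trace = [
    AgentAction("s1", "lookup_order", 10.0),
    AgentAction("s1", "assess_damages"),
    AgentAction("s1", "issue_credit", 500.0),  # the $10 order, $500 credit case
    AgentAction("s2", "lookup_order", 40.0),
    AgentAction("s2", "issue_credit", 40.0),   # proportionate, not flagged
]
print(find_disproportionate_credits(trace))
```

Every action in session `s1` would pass a per-action allowlist; only the comparison between the credit and the earlier order lookup exposes the problem. A real monitor would need richer context (customer lifetime value, refund history, and so on), which this sketch deliberately leaves out.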

Trust and safety layers from major providers (e.g., OpenAI, Google) can mitigate some risks, but they remain inconsistent against sophisticated exploits. A further challenge is the lack of agentic API standardization: without unified telemetry and observability APIs, it’s difficult to monitor and respond to threats across diverse agent environments.
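As a rough illustration of what unified telemetry could buy, the sketch below normalizes events from two made-up platforms into one shared record so they can be ordered on a single timeline. No such standard exists today; the `AgentEvent` schema, the platform names, and both payload shapes are hypothetical and chosen only for the example.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class AgentEvent:
    """Hypothetical common telemetry record (not an existing standard)."""
    platform: str
    agent_id: str
    action: str
    timestamp: str  # ISO 8601 in UTC, e.g. "2025-01-28T10:00:00Z"


def from_platform_a(raw: dict) -> AgentEvent:
    # Assumed payload shape: {"agent": ..., "tool": ..., "ts": ...}
    return AgentEvent("platform_a", raw["agent"], raw["tool"], raw["ts"])


def from_platform_b(raw: dict) -> AgentEvent:
    # Assumed payload shape: {"actor": {"id": ...}, "event": ..., "time": ...}
    return AgentEvent("platform_b", raw["actor"]["id"], raw["event"], raw["time"])


events = [
    from_platform_b({"actor": {"id": "support-bot"}, "event": "issue_credit",
                     "time": "2025-01-28T10:00:05Z"}),
    from_platform_a({"agent": "support-bot", "tool": "lookup_order",
                     "ts": "2025-01-28T10:00:00Z"}),
]

# Timestamps in the same ISO 8601 UTC format sort correctly as strings,
# which yields one cross-platform timeline per agent.
events.sort(key=lambda e: e.timestamp)
for event in events:
    print(json.dumps(asdict(event)))
```

Without a shared schema, each adapter like `from_platform_a` has to be written and maintained by the buyer, which is the gap the paragraph above describes.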

Buyers of agentic solutions should demand comprehensive vulnerability assessments, real-time monitoring, and transparent reporting of AI model lineage and data usage. Providers must secure data pipelines to prevent sensitive information leakage and should implement robust access controls to thwart unauthorized manipulation of agent behaviors. Culture is key: teams need to adopt continuous monitoring, rapid incident response, and a proactive stance on emergent threats.

Stay tuned for more insights on securing agentic systems. If you’re a startup building in this space, we would love to meet you. You can reach us directly at: kshih@forgepointcap.com and jpark@forgepointcap.com.

This blog is also published on Margin of Safety, Jimmy and Kathryn’s substack, as they research the practical sides of security + AI so you don’t have to.